FAQ

- How can diversity induce complexity?

- Under the simplicity-bias assumption, the model learned by default lies at one end of the space of solutions (the simplest end), so models that differ from it must lie toward the more complex end.

- Why use input gradients to quantify diversity?

- Selvaraju et al. (2017) show that input gradients are indicative of the features used by the model.

- Furthermore

- See more in Appendix A:

- Where to split a model into “feature extractor” and “classifier”?

- Why design the diversity regularizer on the input gradients rather than on the models' activations?

- Is the introduction of more diversity just a fancy random search?
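To make the idea of "diversity measured on input gradients" concrete, here is a minimal sketch, not the authors' implementation. It assumes each model maps an input vector to a scalar score, approximates input gradients by central finite differences (real training code would use automatic differentiation), and uses the mean squared cosine similarity between the input gradients of every model pair as the diversity penalty; that particular penalty and all function names are hypothetical choices for illustration.

```python
import math
from itertools import combinations


def input_gradient(f, x, eps=1e-5):
    """Approximate the gradient of scalar model f w.r.t. input x (central differences).

    Finite differences are an illustrative stand-in for autodiff.
    """
    grad = []
    for i in range(len(x)):
        x_plus = list(x)
        x_minus = list(x)
        x_plus[i] += eps
        x_minus[i] -= eps
        grad.append((f(x_plus) - f(x_minus)) / (2 * eps))
    return grad


def cosine(u, v):
    """Cosine similarity, with a small constant to avoid division by zero."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv + 1e-12)


def diversity_penalty(models, x):
    """Hypothetical penalty: mean squared cosine similarity of input gradients over model pairs.

    Values near 0 mean the models are sensitive to different input directions
    (i.e. rely on different features); values near 1 mean they rely on the same ones.
    """
    if len(models) < 2:
        raise ValueError("need at least two models to measure diversity")
    grads = [input_gradient(f, x) for f in models]
    pairs = list(combinations(grads, 2))
    return sum(cosine(g1, g2) ** 2 for g1, g2 in pairs) / len(pairs)
```

For example, two linear models that each read a different input coordinate, `lambda v: v[0]` and `lambda v: v[1]`, get a penalty of about 0, while `lambda v: 2*v[0] + v[1]` and `lambda v: 4*v[0] + 2*v[1]` get about 1 because their input gradients point in the same direction. Penalizing such similarity at the gradient level targets which input features each model uses, rather than how its internal activations happen to be arranged.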

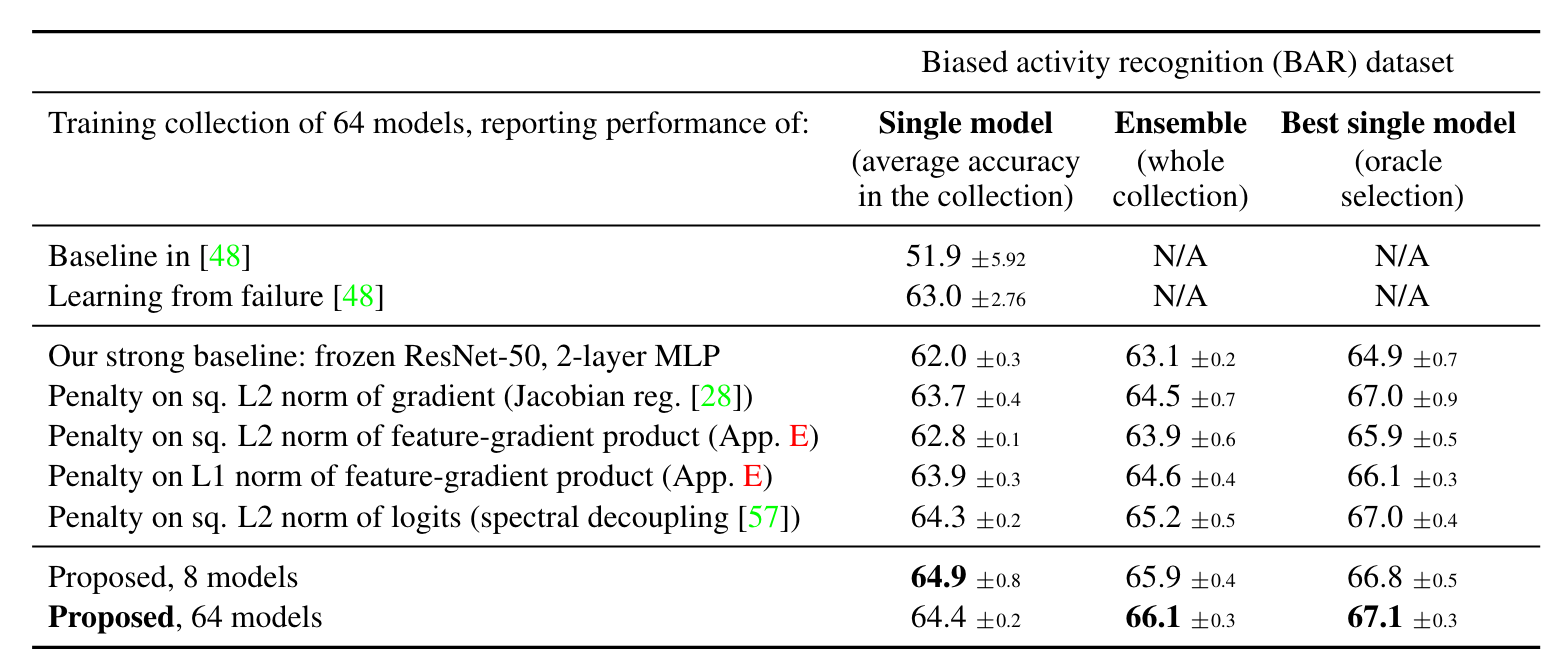

Biased activity recognition

- This experiment asks: are these patterns relevant for OOD generalization in computer vision tasks?

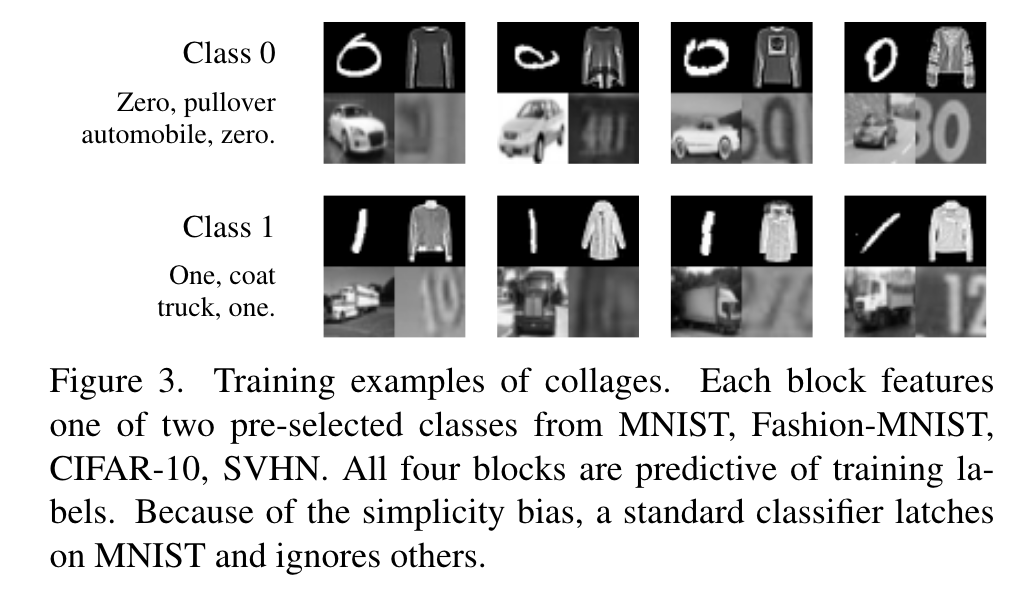

Multi-dataset collages

- This experiment asks: can we learn predictive patterns otherwise ignored by standard SGD and existing regularizers?
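A multi-dataset collage can be pictured as several small images tiled into one input. The sketch below is illustrative only: it assumes a 2×2 layout of equally sized grayscale tiles stored as nested lists, with each tile hypothetically drawn from a different dataset, so that several patterns in one image could each be predictive of the label. The function name and layout are assumptions, not the experiment's exact construction.

```python
def make_collage(top_left, top_right, bottom_left, bottom_right):
    """Tile four equally sized 2D images (lists of rows) into a 2x2 collage.

    Hypothetical helper: the 2x2 layout is an assumption for illustration.
    """
    tiles = (top_left, top_right, bottom_left, bottom_right)
    h = len(top_left)
    w = len(top_left[0])
    for img in tiles:
        if len(img) != h or any(len(row) != w for row in img):
            raise ValueError("all tiles must have the same shape")
    # Concatenate rows side by side, then stack the two halves vertically.
    top = [a + b for a, b in zip(top_left, top_right)]
    bottom = [a + b for a, b in zip(bottom_left, bottom_right)]
    return top + bottom
```

Because every tile occupies a fixed region, a model biased toward the simplest tile can reach high training accuracy while ignoring the rest; the question above is whether the remaining, harder tiles can still be learned.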

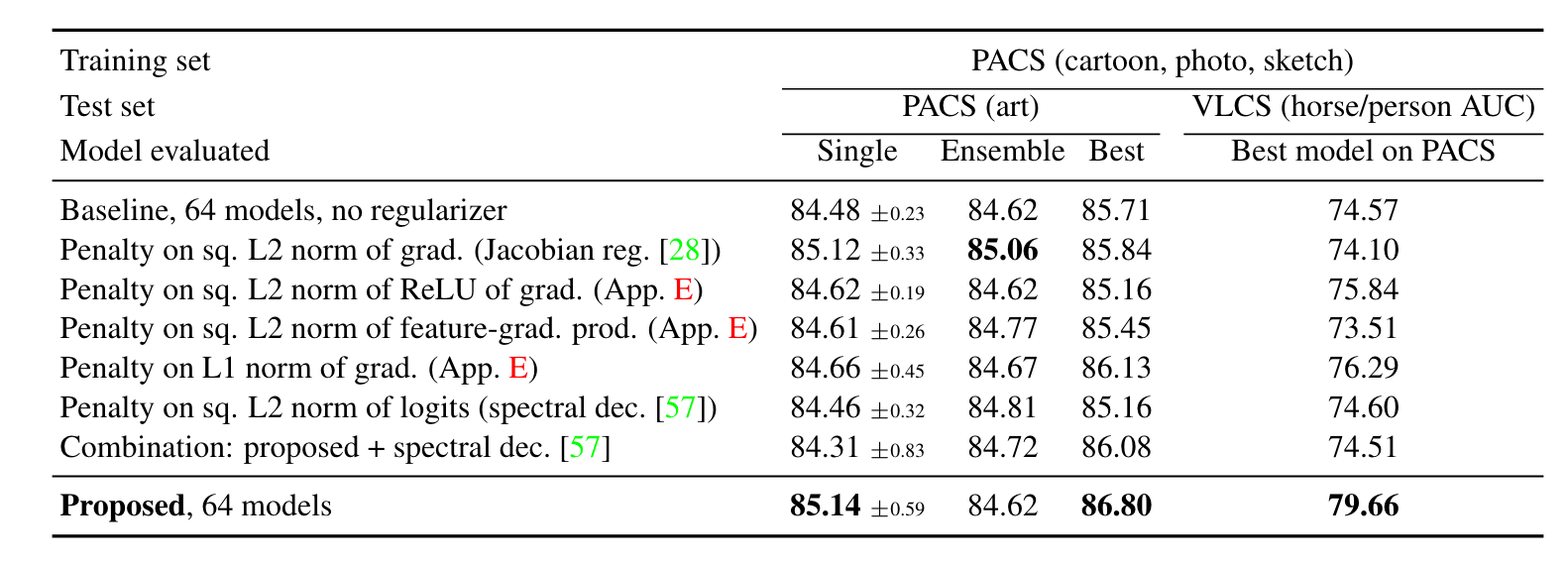

Domain generalization

- The PACS dataset is a standard benchmark for visual domain generalization (DG). It contains 4 domains (Art, Cartoon, Photo, and Sketch), each with the same 7 categories.

- VLCS is included as an additional cross-dataset evaluation, i.e., zero-shot transfer.
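PACS is commonly evaluated with a leave-one-domain-out protocol: train on three domains and test on the held-out fourth, then average over the four choices. The sketch below enumerates those splits; that the reported results follow exactly this protocol is an assumption, and the helper names are hypothetical.

```python
def leave_one_domain_out(domains):
    """Yield (train_domains, test_domain) pairs, holding out each domain once."""
    for held_out in domains:
        train = [d for d in domains if d != held_out]
        yield train, held_out


def average_over_domains(acc_by_domain):
    """Mean of per-held-out-domain accuracies (dict: domain -> accuracy)."""
    return sum(acc_by_domain.values()) / len(acc_by_domain)


if __name__ == "__main__":
    for train, test in leave_one_domain_out(["Art", "Cartoon", "Photo", "Sketch"]):
        print(f"train on {train}, test on {test}")
```

Each of the four runs never sees the test domain during training, which is what makes the averaged accuracy a measure of domain generalization rather than in-domain fit.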

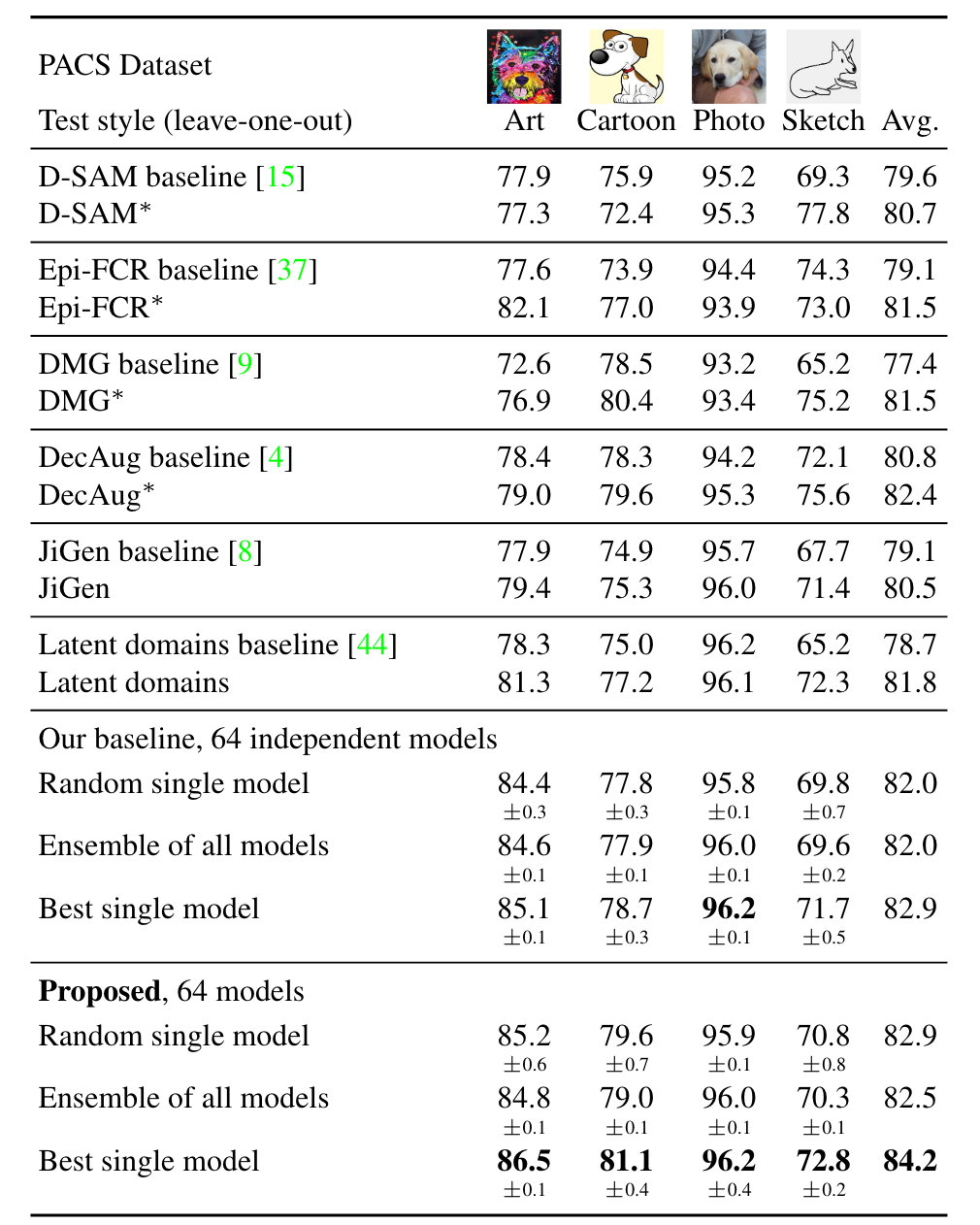


Comparison of the proposed method with existing methods on PACS.

Discussion

- Limitations of the method

- There are no guarantees for setting the main hyperparameters (the regularizer strength and the number of models learned).

- Are model fitting and model selection equally hard?

- In this approach, the two steps can be completely decoupled: a set of diverse models is fitted first, and a model is selected from that set afterwards.

- Universality of inductive biases

- No learning algorithm's inductive biases can be universally superior to another's.

- This method does not affect inductive biases in a directed way. It only increases the variety of the learned models, so it could be seen as a “meta-regularizer”.

- Experiments also show that intuitive notions behind classical regularizers like smoothness (Jacobian regularization), sparsity (L1 norm), or simplicity (L2 norm) are sometimes detrimental.
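For reference, here is a hedged sketch of the penalty terms the classical regularizers named above add to a training loss. It assumes a scalar-output model `f`, a flat list of weights, and hypothetical strength parameters `lam_l1`, `lam_l2`, `lam_jac`; the Jacobian term is approximated by central finite differences, where real code would use automatic differentiation.

```python
def l1_penalty(weights):
    """Sparsity: sum of absolute weight values."""
    return sum(abs(w) for w in weights)


def l2_penalty(weights):
    """Simplicity (weight decay): sum of squared weights."""
    return sum(w * w for w in weights)


def jacobian_penalty(f, x, eps=1e-5):
    """Smoothness: squared norm of the input gradient of scalar model f at x.

    For a scalar output this equals the squared Frobenius norm of the Jacobian;
    finite differences are an illustrative stand-in for autodiff.
    """
    total = 0.0
    for i in range(len(x)):
        xp = list(x)
        xm = list(x)
        xp[i] += eps
        xm[i] -= eps
        d = (f(xp) - f(xm)) / (2 * eps)
        total += d * d
    return total


def regularized_loss(task_loss, weights, f, x, lam_l1=0.0, lam_l2=0.0, lam_jac=0.0):
    """Task loss plus weighted classical penalties (hypothetical strengths)."""
    return (task_loss
            + lam_l1 * l1_penalty(weights)
            + lam_l2 * l2_penalty(weights)
            + lam_jac * jacobian_penalty(f, x))
```

Each term pushes every trained model toward one fixed property (sparse, small, or smooth), which is the kind of directed inductive bias that the diversity regularizer, as a "meta-regularizer", avoids imposing.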

References

- Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., and Batra, D. (2017). Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, pages 618–626.

- Shah, H., Tamuly, K., Raghunathan, A., Jain, P., and Netrapalli, P. (2020). The pitfalls of simplicity bias in neural networks. Advances in Neural Information Processing Systems, 33:9573–9585.

- Teney, D., Abbasnejad, E., Lucey, S., and Van den Hengel, A. (2022). Evading the simplicity bias: Training a diverse set of models discovers solutions with superior OOD generalization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pages 16761–16772.

Presenter: Yang Zhang, SDS, Fudan University, August 4, 2024